Challenging Core Assumptions, Tech Backlash Paves The Way for More Thoughtful HealthTech

David Shaywitz

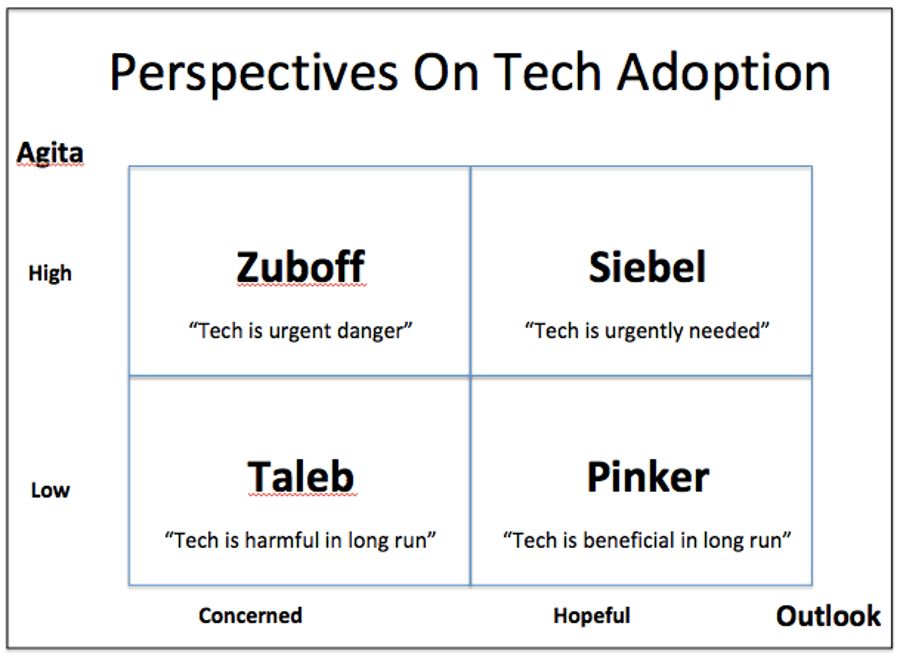

Digital transformation (as I recently discussed), and the implementation of emerging technologies more generally, is routinely pitched by enthusiasts like Tom Siebel as both urgent and inevitable, something organizations need to embrace or risk irrelevance, if not extinction.

Yet the “embrace or die” assertion is under increasing, and healthy, scrutiny, as the “techlash” (technology backlash) gains steam.

“Surveillance Capitalism”: Tech As Force For Harm

Voices of concern have started to coalesce under the banner of what Harvard Business School professor emerita Shoshana Zuboff has termed “surveillance capitalism.” She synthesized and amplified this growing concern in her 700+ page 2019 book The Age of Surveillance Capitalism. For a shorter summary, I recommend reading this recent New York Times essay by Zuboff, and listening to this especially informative interview with her conducted by distinguished technology journalist Kara Swisher (of Recode and the Times).

The core of Zuboff’s critique can be found in the story of Google itself, a company that (as described in the Recode podcast) initially came to prominence by building a phenomenally effective search engine that users appreciated. But the company struggled to make money in the early days, and “very swanky venture capitalists were threatening to withdraw support,” according to Zuboff. In an existential panic, Google apparently realized that it was sitting on a huge amount of interesting data, far more than was needed to improve the search algorithm.

At its inception, reports Zuboff, Google had rejected online advertising as a “disfiguring force both in general on the internet and specifically for their search engine.”

But spurred by the threat of extinction, Zuboff explains, Google declared a “State of Exception,” akin to a state of emergency, that “suspended principles” and permitted the company to contemplate previously shunned approaches. They recognized they had accumulated “collateral behavioral data that was left over from people’s searching and browsing behavior,” data that had been set aside, and considered waste. But upon further review, says Zuboff, Google engineers realized there was great predictive power in the combination of this data exhaust plus computation: the ability to predict a piece of future behavior — in this case, where someone is likely to click — and sell this information to advertisers.

The result, according to Zuboff, was a radical transformation of online advertising, turning it into a market “trading in behavioral futures,” while claiming “private human experience” in the process. “We thought that we search Google,” writes Zuboff, “but now we understand that Google searches us.”

As this model caught on, Zuboff explains, tech companies accrued exceptional influence, due to “extreme asymmetries of knowledge and power.” Over time, these companies began to “seize control of information and learning itself.”

These technology companies, asserts Zuboff, “rely on psychic numbing and messages of inevitability to conjure the helplessness, resignation, and confusion that paralyze their prey.” She argues “the most treacherous hallucination of them all” is “the belief that privacy is private.” It’s not, she argues, because “the effectiveness of … private or public surveillance and control systems depends upon the pieces of ourselves that we give up – or that are secretly stolen from us.”

Notably, Swisher strongly shares these privacy concerns, even writing a year-end commentary in the Times last December entitled “Be Paranoid About Privacy,” urging us to “take back our privacy from tech companies – even if that means sacrificing convenience.” She writes, “We trade the lucrative digital essence of ourselves for much less in the form of free maps or nifty games or compelling communications apps.” Adds Swisher, “It’s up to us to protect ourselves.”

(In contrast to some health tech execs I know, Swisher views Europe’s General Data Protection Regulation [GDPR] and California’s recently-enacted Consumer Privacy Act as positive developments.)

Both Siebel and Zuboff seem to agree on the power of the emerging technology. They vehemently disagree about whether it’s a force for good or ill.

The Pinker Perspective: Cautious Optimism

But another perspective is that both Siebel and Zuboff overstate at least the near-term power and utility of technology by accepting as a given that the impetus to collect every possible piece of data about every possible thing will soon result in remarkably precise predictions.

This is what Siebel promises, and Zuboff fears.

In contrast, I found myself agreeing with the more grounded viewpoint Harvard psychologist Steven Pinker offered in a 2019 discussion with Sapiens author Yuval Noah Harari (who was making the case for surveillance capitalism).

In recent years, Pinker has attracted controversy by arguing (in his 2018 book Enlightenment Now, and elsewhere) that despite endless lamentations and prophecies of doom, life is actually getting better, and is on a trajectory to improve still more.

Besides Pinker, this encouraging perspective has been recently discussed by a number of authors including Hans Rosling (Factfulness), Andrew McAfee (The Second Machine Age, More From Less – my Wall Street Journal review here), and John Tierney and Roy Baumeister (The Power of Bad – my Wall Street Journal review here).

Pinker says he’s not losing sleep about emerging technologies, in large part because he suspects the rate and extent of technological progress have been significantly overstated. Consider human genetic engineering, he says, where frightening concerns had been raised about engineering people with a gene that made them smarter or better athletes. That turned out to be a wild oversimplification, he argues – many genes impact most traits, and since genes tend to be involved in many functions, there’s a good chance any intervention would do at least as much harm as good. The limitations of genetic data are also something Denny Ausiello and I anticipated in this 2000 New York Times “Week in Review” commentary, and something Andreessen Horowitz partner Jorge Conde thoughtfully reflects on in this recent a16z podcast.

Returning to AI, Pinker notes that “predicting human behavior based on algorithms” is “not a new idea,” nor one likely to immediately destroy the planet. “I suspect,” Pinker says, “we’ll have more time than we think simply because even if the human brain is a physical system, which I believe it is, it’s extraordinarily complex, and we’re nowhere close to being able to micromanage it even with artificial intelligence algorithms. The AI algorithms are very good at playing video games and captioning pictures, but they are often quite stupid when it comes to low probability combinations of events that they haven’t been trained on… even the simple problems turn out to be harder than we think.”

He adds, “When it comes to hacking human behavior – it’s all the more complex. Not because there’s anything mystical or magic about the human brain – it’s an organ – but an organ that’s subject to fantastic non-linearities and chaos and unpredictability, and the algorithm that will control our behavior isn’t going to be arriving any time soon.”

In a 2018 op-ed, Pinker notes the “vast incremental progress the world has enjoyed in longevity, health, wealth, and education,” and adds that technology “is not the reason that our species must some day face the Grim Reaper. Indeed, technology is our best hope for cheating death, at least for a while.”

He describes threats such as “the possibility that we will be annihilated by artificial intelligence” as “the 21st century version of the Y2K bug,” which was associated with apocalyptic prophecies, yet ultimately had negligible impact.

In a particularly interesting exchange between Harari and Pinker, Harari expressed concern that the surveillance state was turning our lives into a continuous, extremely stressful job interview, suggesting we’re heading to the point where everything we do every moment of our lives could be surveilled, recorded, and analyzed in a way that could impact future employment.

Pinker, in response, noted that “One of the most robust findings in psychology is that actuarial decision making – statistical decision making — is more reliable than human intuition, clinical decision making. We’ve known this for 70 years but we typically don’t do what would be more rational.” In this example, it would be rational to scrap job interviews, and use statistically-informed predictors instead. Even though we know job interviews are subject to bias and error, Pinker points out, we still use them, and don’t “hand it over to algorithms.”

Of course, many technophiles – and technophobes — would say this is exactly what’s already occurring.

The Taleb Quadrant

There’s actually a fourth quadrant to consider, which I think of as represented by Nassim Taleb. As he articulates with particular clarity in Antifragile, Taleb is critical of what he sees as our worship of new technology, not because he fears it’s about to immediately lead to the end of life as we know it, but rather because he thinks our increased interconnectivity places us at greater risk of a catastrophic failure – i.e., makes us far more fragile. He trusts approaches that have stood the test of time, “things that have been around, things that have survived,” and worries about our “neomania – the love of the modern for its own sake.”

Implications for Health Tech

While perhaps inconvenient for some health tech entrepreneurs in the short term, the increasingly robust discussion about the impact of technology represents a positive development for the field.

Why positive? Because it creates the intellectual space needed to challenge tech assertions and assumptions, while demanding rigorous proofs of value.

I incline towards Pinker’s perspective. Technology, in my view, offers us real hope in our efforts to maintain health and forestall and combat illness. But figuring out how to derive meaningful benefit from the technology will be neither as easy nor as rapid as consultants promise. As we work through these challenges, we need to be thoughtful and deliberate, and consider the right kind of guardrails we want to put in place as we bring ever-more powerful technologies to bear in our healthcare system. The hurdles we must clear – technological, social and political in nature – as we create systems that can meaningfully intervene and improve upon what we have in healthcare are enormous. We would be foolish to underestimate the work ahead – and even more foolish not to embrace the challenges and get going.